There is a strong consensus that artificial intelligence (AI) will bring changes far more profound than those of any other technological revolution in human history. Depending on the course that this revolution takes, AI will either strengthen our ability to make more informed choices or reduce human autonomy; expand the human experience or replace it; create new forms of human activity or make existing jobs redundant; help distribute well-being to many or increase the concentration of power and wealth in the hands of a few; expand democracy in our societies or put it in danger.

Europe carries the responsibility of shaping the AI revolution. The choices we face today are related to fundamental ethical issues about the impact of AI on society, in particular, how it affects labor, social interactions, healthcare, privacy, fairness and security. The ability to make the right choices requires new solutions to fundamental scientific questions in AI and human-computer interaction (HCI).

There is a need to shape the AI revolution in a direction that is beneficial to humans both individually and societally, and that adheres to European ethical values and social, cultural, legal, and political norms.

2018 - 2022

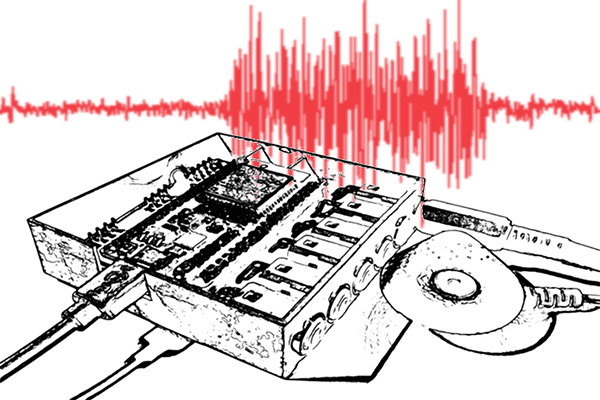

Current technical sensor systems offer capabilities that are superior to human perception. Cameras can capture a spectrum that is wider than visible light, high-speed cameras can show movements that are invisible to the human eye, and directional microphones can pick up sounds at long distances. The vision of this project is to lay a foundation for the creation of digital technologies that provide novel sensory experiences and new perceptual capabilities for humans that are natural and intuitive to use.

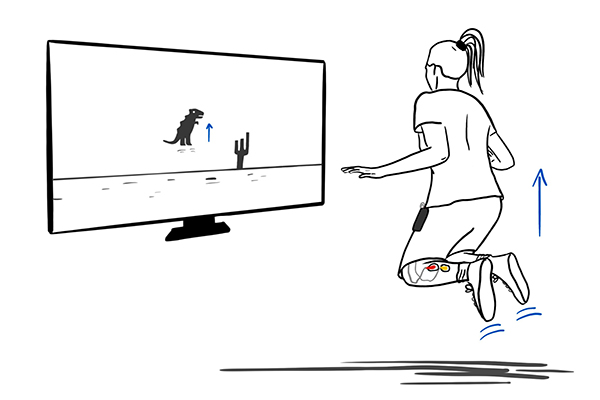

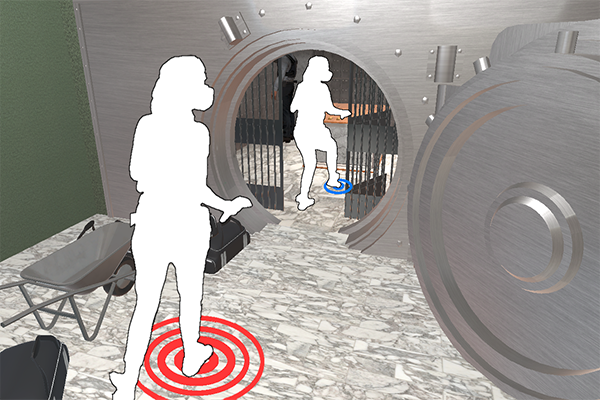

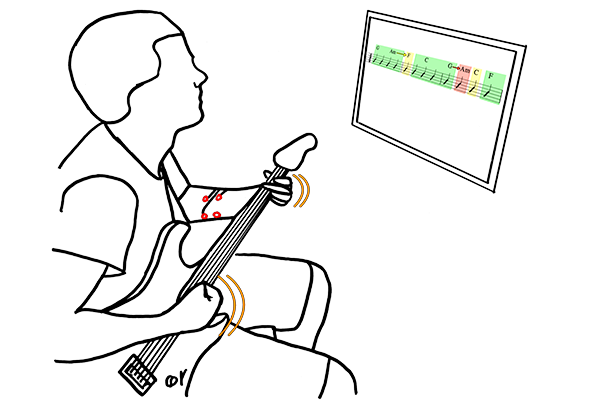

In a first step, the project will assess the feasibility of creating artificial human senses that provide new perceptual channels to the human mind without increasing the experienced cognitive load. A particular focus is on creating intuitive and natural control mechanisms for amplified senses using eye gaze, muscle activity, and brain signals. The project will quantify the effectiveness of new senses and artificial perceptual aids compared to the baseline of unaugmented perception. The overall objective is to systematically research, explore, and model new means for increasing the human intake of information in order to lay the foundation for new and improved human senses enabled through digital technologies and to enable artificial reflexes.

The ground-breaking contributions of this project are (1) to demonstrate the feasibility of reliably implementing amplified senses and new perceptual capabilities, (2) to prove the possibility of creating an artificial reflex, (3) to provide an example implementation of amplified cognition that is empirically validated, and (4) to develop models, concepts, components, and platforms that will enable and ease the creation of interactive systems that measurably increase human perceptual capabilities. Further information is available at the corresponding website: ERC Amplify

2015 - 2017

The SFB-TRR 161 is a collaborative, interdisciplinary research project that connects 17 project teams of the University of Stuttgart, the University of Konstanz, and the Max-Planck Institute for Biological Cybernetics. The teams work in four Project Areas and three Task Forces in the field of Visual Computing. Further information is available at the corresponding website: SFB-TRR 161.

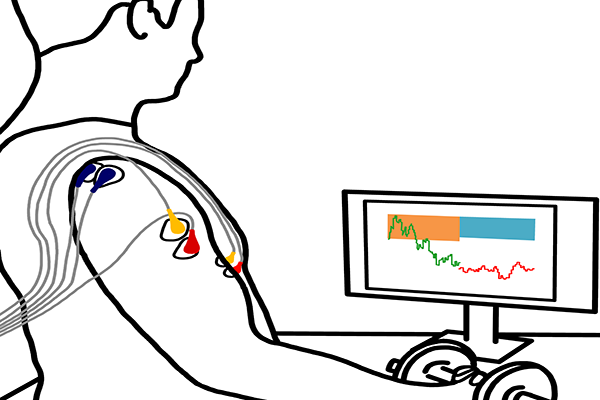

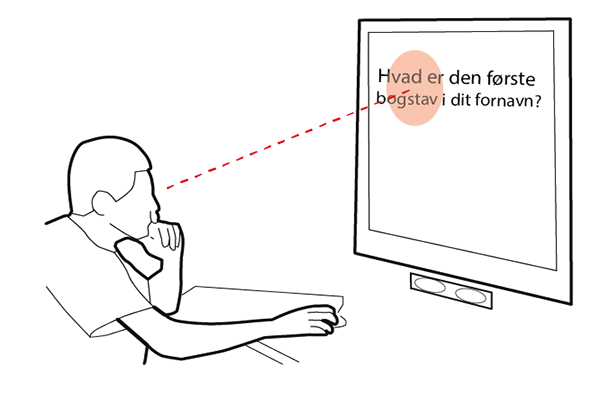

C02: Physiologically Based Sensing and Adaptive Visualization

In this project, we research new methods and techniques for cognition-aware visualizations. The basic idea is that a cognition-aware adaptive visualization will observe the physiological response of a person while they interact with a system and use this response as implicit input. Electrical signals measured on the body (e.g., EEG, EMG, ECG, galvanic skin response), changes in physiological parameters (e.g., body temperature, respiration rate, and pulse), and the users’ gaze behavior are used to estimate cognitive load and understanding. Through experimental research, concepts and models for adaptive and dynamic visualizations will be created. Frameworks and tools will be realized and empirically validated. You can find the project here.